I had a problem with this tool: https://github.com/Dryusdan/garmin-to-fittrackee .

It turned out to be a configuration problem in apache2 (which handles the certificates for me), so I enabled the headers module:

# /usr/sbin/a2enmod headers

Enabling module headers.

To activate the new configuration, you need to run:

systemctl restart apache2

Then I added a directive to my configuration file:

<IfModule>

<VirtualHost>

...

RequestHeader set X-Forwarded-Proto "https"

...

ErrorLog ${APACHE_LOG_DIR}/error.fit-ssl.log

CustomLog ${APACHE_LOG_DIR}/access.fit-ssl.log combined

...

Include /etc/letsencrypt/options-ssl-apache.conf

SSLCertificateFile /etc/letsencrypt/live/fit.cyber-neurones.org/fullchain.pem

SSLCertificateKeyFile /etc/letsencrypt/live/fit.cyber-neurones.org/privkey.pem

</VirtualHost>

</IfModule>
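Before restarting, a quick sanity check can help. The following is only a minimal sketch, assuming a Debian-style install where `apache2ctl` is available. `sets_forwarded_proto` is a hypothetical helper name used for illustration, not something shipped with Apache or garmin-to-fittrackee. It checks that a config file contains an active `RequestHeader set X-Forwarded-Proto "https"` line, and the rest asks Apache whether `mod_headers` is loaded and whether the configuration parses.

```shell
#!/bin/sh
# Hypothetical helper (illustration only): succeeds if the given file
# contains an active (uncommented) directive
#   RequestHeader set X-Forwarded-Proto "https"
sets_forwarded_proto() {
    grep -Eiq '^[[:space:]]*RequestHeader[[:space:]]+set[[:space:]]+X-Forwarded-Proto[[:space:]]+"?https"?[[:space:]]*$' "$1"
}

# Only run the live checks where apache2ctl is actually installed.
if command -v apache2ctl >/dev/null 2>&1; then
    # List the loaded modules and look for mod_headers.
    if apache2ctl -M 2>/dev/null | grep -q 'headers_module'; then
        echo "mod_headers is loaded"
    else
        echo "mod_headers is NOT loaded"
    fi
    # Syntax check of the whole configuration before restarting.
    apache2ctl configtest || echo "configuration has errors"
fi
```

The helper can then be pointed at the site file, for example `sets_forwarded_proto /etc/apache2/sites-enabled/your-site.conf && echo ok` (the path here is an assumption; use your own).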

Finally:

# systemctl restart apache2

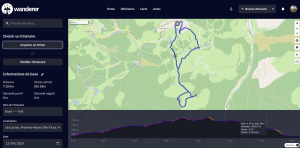

After that I ran the sync setup, with no problem:

# garmin2fittrackee setup fittrackee

Client id: chutunsecret

Client secret:

Fittrackee domain: fit.cyber-neurones.org

Please go to

https://fit.cyber-neurones.org/profile/apps/authorize?response_type=.... and authorize access.

Enter the full callback URL from the browser address bar after you are redirected and press : https://localhost/?code=....

[04/27/25 17:13:33] INFO Logging successfull. Saving configuration fittrackee __web_application_flow

Thanks to:

– https://social.dryusdan.fr/@Dryusdan

– https://fosstodon.org/@FitTrackee

And thanks to the docs: https://docs.fittrackee.org/en/oauth.html#flow even though they deal more with NGINX than with Apache2.

In short, the problem was me.